The IRT Saint Exupéry has made critical embedded systems one of its areas of expertise. In a previous article, we explained why research activities in the field of software are essential, particularly in the aerospace and space sectors.

We continue our series of articles dedicated to this research theme by highlighting the importance of system timing analysis. This is also an opportunity to shed light on the work carried out by IRT Saint Exupéry over the past ten years on this topic.

Faster…

The performance and complexity of processing platforms evolve in parallel with the software they execute. This process has a natural tendency to accelerate, as any increase in performance is immediately leveraged to introduce new functional capabilities, which in turn demand additional processing power.

Until recently, the regular increase in processor clock frequency was a major driver of performance improvements. This model was particularly advantageous for developers, as it provided nearly “free” performance gains—simply porting an application to new hardware was enough to achieve better performance.

However, this model has now reached its limits.

These limits are physical, primarily dictated by the ability to dissipate the heat generated by ever-faster, smaller, and more numerous transistors on the same silicon surface. To overcome these constraints and continue improving processing speeds, the current approach relies on using highly sophisticated processing units, increasing their number, and enhancing their specialization.

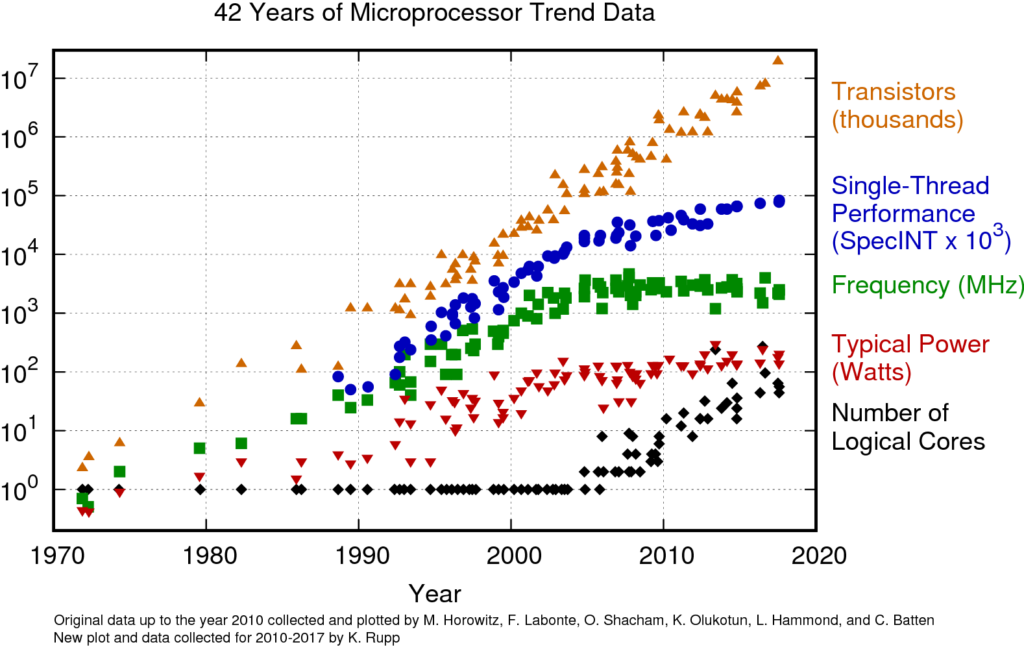

The illustration above clearly shows this evolution: while the number of transistors continues to grow exponentially, clock frequency has plateaued since the mid-2000s. It has now been “overtaken” by the number of cores, which continues to grow steadily.

(Source: https://www.karlrupp.net/2018/02/42-years-of-microprocessor-trend-data/)

While this evolution transformed the market more than a decade ago, it continues to present significant challenges in the field of real-time critical systems. In the consumer market—which ultimately drives the microprocessor industry—the priority is “user experience,” meaning the performance as perceived by the end user. In this context, meeting deadlines 99% of the time is considered sufficient.

The objective is achieved even if these same timing constraints are occasionally violated; from an economic standpoint, the resulting performance remains acceptable. Reducing the remaining 1% of out-of-specification cases would likely require disproportionate effort for a minimal economic return.
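To make the 99% argument concrete, the following minimal Python sketch computes the deadline-miss ratio, the 99th percentile and the maximum of a synthetic, purely hypothetical trace of 1,000 response times. The function name `deadline_stats`, the trace and the 16 ms deadline are illustrative assumptions, not figures from the article.

```python
import random

def deadline_stats(response_times_ms, deadline_ms):
    """Return (deadline-miss ratio, 99th-percentile response time, maximum response time)."""
    ordered = sorted(response_times_ms)
    n = len(ordered)
    misses = sum(1 for t in ordered if t > deadline_ms)
    # Nearest-rank percentile: smallest sample with at least 99% of samples at or below it.
    rank = -(-99 * n // 100)  # ceil(0.99 * n) using integer arithmetic
    p99 = ordered[max(rank, 1) - 1]
    return misses / n, p99, ordered[-1]

# Hypothetical trace: 995 nominal frames plus 5 rare outliers, with a 16 ms deadline.
rng = random.Random(42)
trace = [rng.uniform(8.0, 15.0) for _ in range(995)] + [18.0, 19.5, 21.0, 25.0, 40.0]
miss_ratio, p99, worst = deadline_stats(trace, deadline_ms=16.0)
print(f"miss ratio = {miss_ratio:.1%}, p99 = {p99:.1f} ms, max = {worst:.1f} ms")
```

Here the 99th percentile stays below the deadline, which is acceptable from a user-experience standpoint, while 0.5% of the frames still overshoot it, up to 40 ms: precisely the cases a critical system cannot ignore.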

Specificity of the aerospace sector

Conversely, this approach is incompatible with the requirements of the real-time critical systems domain. In this field, missing a deadline is considered a failure that can have potentially catastrophic consequences.

Faster, but on time…

The behavior of a real-time embedded system is, by definition, tied to the passage of physical time. For example, it must take position measurements at precise time intervals to estimate speed or acceleration, or it must generate actuator commands for braking or steering to avoid a collision. Sometimes, the timing constraint is more subtle and involves more abstract considerations, such as preserving the causality relationship. In all cases, mastering time does not simply mean “going faster,” but rather ensuring and demonstrating that actions occur at the right moment.
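The speed example can be illustrated with a small Python sketch. It assumes a hypothetical vehicle moving at a constant 20 m/s and a software loop that estimates speed by finite differences while always dividing by the nominal 10 ms sampling period; all names and figures are illustrative.

```python
def estimate_speed(p_prev_m, p_curr_m, dt_s):
    """Finite-difference speed estimate (m/s) from two position samples taken dt_s apart."""
    return (p_curr_m - p_prev_m) / dt_s

TRUE_SPEED = 20.0       # m/s, hypothetical constant speed
NOMINAL_PERIOD = 0.010  # s, the sampling period the software assumes

def position_at(t_s):
    """Position (m) of the hypothetical vehicle at time t_s."""
    return TRUE_SPEED * t_s

# On-time sampling: the second sample really is taken 10 ms after the first.
on_time = estimate_speed(position_at(0.0), position_at(0.010), NOMINAL_PERIOD)

# Late sampling: the second sample is taken 2 ms late, but the software still
# divides by the nominal period, so the estimate is 20% too high.
late = estimate_speed(position_at(0.0), position_at(0.012), NOMINAL_PERIOD)

print(on_time, late)
```

A sample taken only 2 ms late yields about 24 m/s instead of 20 m/s: the arithmetic is correct, the timing is not.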

In general, real-time systems are classified based on the impact of a missed deadline on the service they provide. In a hard real-time system, any delay in service delivery is considered a failure, which can be catastrophic depending on the context—think of an airbag deployment mechanism, an emergency braking system in a train, or an automotive engine ignition control system.

Conversely, in a soft real-time system, missing a deadline results in degraded service, where the value of the service decreases progressively with the delay.
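One common way to picture this distinction is a value function giving the usefulness of a result as a function of its completion time. The sketch below is a minimal illustration; the linear decay used for the soft case and the `decay_ms` parameter are assumptions chosen for simplicity, and real soft real-time services may degrade in other ways.

```python
def hard_value(completion_ms, deadline_ms):
    """Hard real-time: the result is useful only if on time; lateness is a failure (None)."""
    return 1.0 if completion_ms <= deadline_ms else None

def soft_value(completion_ms, deadline_ms, decay_ms):
    """Soft real-time: value decreases linearly with lateness, reaching 0 after decay_ms."""
    if completion_ms <= deadline_ms:
        return 1.0
    lateness = completion_ms - deadline_ms
    return max(0.0, 1.0 - lateness / decay_ms)

for t in (8, 10, 12, 15, 25):
    print(t, hard_value(t, deadline_ms=10), soft_value(t, deadline_ms=10, decay_ms=10))
```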

In all cases, time is a critical concern in the development of a real-time system. The service must be delivered quickly to ensure the system’s functional performance (e.g., maintaining the stability of a control loop or deploying an airbag in time) and must also be provided at the precise moment it is expected. These two objectives are interconnected but distinct.

At first glance, meeting timing deadlines and maximizing speed may seem like converging objectives. It is therefore common to associate the term real-time with the idea of speed. However, being real-time primarily means adhering to strict timing constraints, regardless of their magnitude—whether in the microsecond, second, or even minute range. Moreover, it is not only necessary to meet these deadlines but also to demonstrate, with certainty, that they are met in all operational scenarios.

In practice, chip designers face two technically conflicting requirements: computing fast, which involves implementing complex and difficult-to-control hardware mechanisms, and computing on time, which—on the contrary—calls for simple and predictable mechanisms. In other words, increasing computation speed requires greater parallelism and more complex execution structures, ultimately making it harder to guarantee that deadlines will always be met.

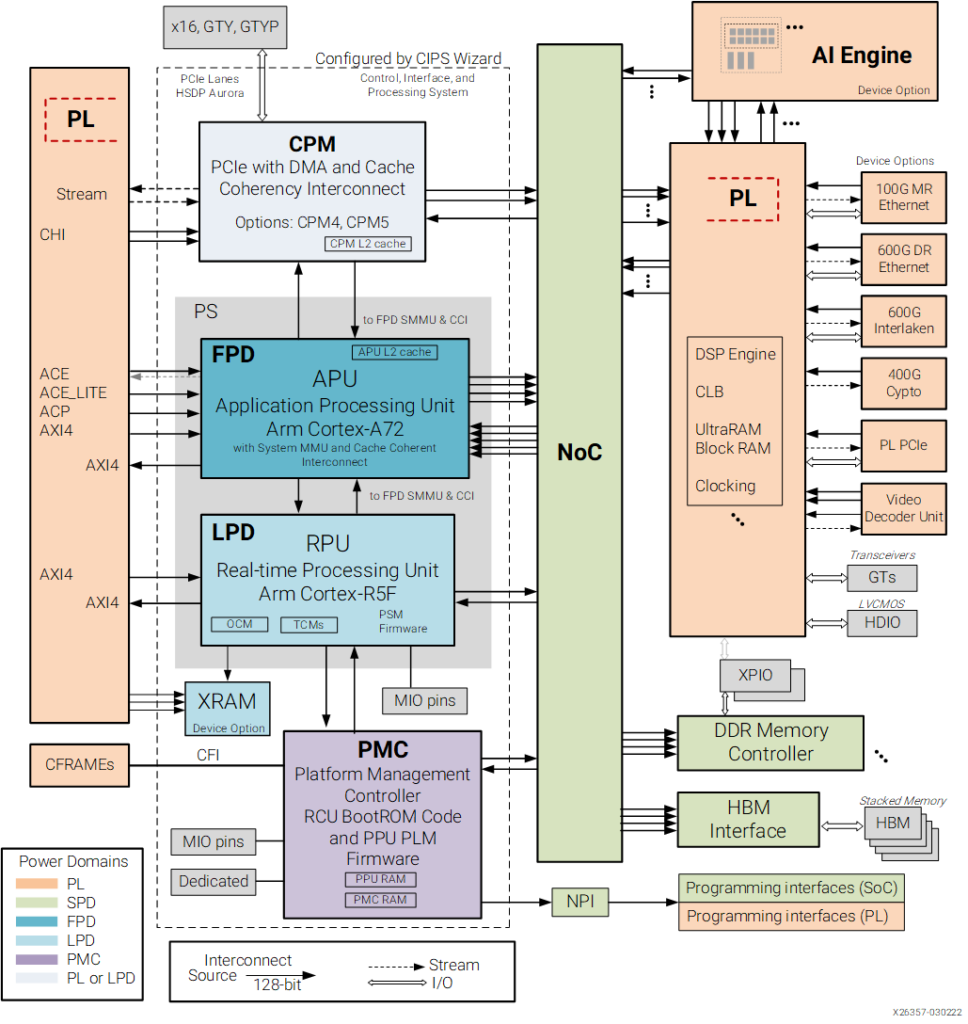

For a long time, a highly conservative approach was favored: parallelism was kept to a strict minimum and, when introduced, the level of concurrency remained low. However, this stance is no longer viable today, for two main reasons. First, simple execution platforms are becoming increasingly rare: the clear trend is toward integrating more and more cores, as well as programmable logic, onto a single chip (e.g., the architecture of Xilinx’s Versal devices, shown in the figure). Second, the growing complexity of applications, including critical ones, continuously demands new computing capabilities; advanced driver assistance systems (ADAS) in the automotive sector are a notable example.

Worst-case execution times and interference analysis

A real-time system is thus subject to strict timing constraints, such as response times, throughput, and data freshness. Ensuring and proving that these constraints are met is a complex challenge that requires expertise in theoretical computer science as well as hardware and software engineering.

Over the past fifteen years, this complexity has significantly increased with the emergence of computing platforms integrating various forms of hardware parallelism: multiple and hybrid processing cores, hardware accelerators (SIMD units, GPUs, AI accelerators), and so on.

Development, verification, validation, and certification practices (e.g., CAST-32 or AMC 20-193 in the aerospace sector) have had to evolve so that compliance with timing constraints can still be guaranteed.

Let’s take the example of Worst-Case Execution Time (WCET) analysis. In the past, simple empirical methods such as the high-water mark technique, which takes the maximum execution time observed over a set of tests, were widely used. Today, much more sophisticated approaches are employed, such as static analysis by abstract interpretation or rare-event statistics.
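The difference between the two families can be sketched in a few lines of Python. The high-water mark simply keeps the largest observed value; the rare-event estimate shown here is one simple instance of extreme value theory (block maxima fitted with a Gumbel distribution by the method of moments), not the method of any tool cited in this article. The data are synthetic and hypothetical, the function names are illustrative, and the sketch deliberately omits the statistical hypotheses (independent, identically distributed runs; representative test conditions) that a real analysis must justify.

```python
import math
import random
import statistics

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant

def high_water_mark(samples):
    """Empirical approach: the largest execution time observed during testing."""
    return max(samples)

def gumbel_estimate(samples, block_size, exceedance_prob):
    """Simplified rare-event estimate: block maxima + Gumbel fit (method of moments).

    Returns the value that the maximum of one block of `block_size` runs exceeds with
    probability `exceedance_prob` under the fitted model.
    """
    maxima = [max(samples[i:i + block_size])
              for i in range(0, len(samples) - block_size + 1, block_size)]
    beta = statistics.stdev(maxima) * math.sqrt(6) / math.pi   # Gumbel scale
    mu = statistics.fmean(maxima) - EULER_GAMMA * beta          # Gumbel location
    # Invert the Gumbel CDF F(x) = exp(-exp(-(x - mu) / beta)) at F(x) = 1 - exceedance_prob.
    return mu - beta * math.log(-math.log1p(-exceedance_prob))

# Hypothetical, synthetic execution times in cycles (not measurements from a real target).
rng = random.Random(1)
samples = [10_000 + rng.expovariate(1 / 300) for _ in range(10_000)]

hwm = high_water_mark(samples)
estimate = gumbel_estimate(samples, block_size=100, exceedance_prob=1e-9)
print(f"high-water mark: {hwm:.0f} cycles; Gumbel estimate at 1e-9: {estimate:.0f} cycles")
```

The high-water mark can never exceed what was actually observed, whereas the fitted model extrapolates toward execution times too rare to show up in a test campaign of reasonable length.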

Compliance with a system’s response-time and latency requirements must be guaranteed from the design phase and verified with the same rigor as the results the system produces. When the system is fully or partially implemented in software, demonstrating its “real-time properties” involves estimating the WCETs of its software components. The WCET of a function is the longest execution time it can exhibit in operation; in practice, analyses compute a safe upper bound on it.
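Stated a little more formally, with notation introduced here for illustration only: if $T_f(i, s)$ denotes the execution time of a function $f$ for an input $i \in \mathcal{I}$, starting from a hardware state $s \in \mathcal{S}$, then

$$
\mathrm{WCET}(f) = \max_{i \in \mathcal{I},\, s \in \mathcal{S}} T_f(i, s),
\qquad
\widehat{W}_f \ge \mathrm{WCET}(f),
$$

where $\widehat{W}_f$ is the estimate produced by the analysis, which must never underestimate the true WCET.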

On simple hardware architectures, where the execution time of an instruction depends only on the instruction itself and not on the state of the processor at the time of execution, estimating WCET is conceptually straightforward. It is sufficient to identify the execution path whose total duration, calculated as the sum of the durations of each instruction, is maximal.
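On such a processor, the WCET is the maximum, over all execution paths, of the sum of the instruction costs along the path. The minimal Python sketch below performs this computation on a hypothetical acyclic control-flow graph; the block names and cycle counts are invented for illustration, and real analysers must additionally handle loops (with known iteration bounds) and infeasible paths.

```python
from functools import lru_cache

# Hypothetical acyclic control-flow graph: basic block -> successor blocks.
CFG = {
    "entry": ["check"],
    "check": ["fast_path", "slow_path"],
    "fast_path": ["exit"],
    "slow_path": ["exit"],
    "exit": [],
}

# Hypothetical per-instruction cycle counts for each basic block. They are fixed and
# context-independent, as on the simple architecture described above.
BLOCK_INSTRUCTIONS = {
    "entry": [1, 1, 2],
    "check": [1, 3],
    "fast_path": [1, 1],
    "slow_path": [4, 4, 4, 2],
    "exit": [1],
}

def block_cost(block):
    """Cost of a basic block: the sum of the durations of its instructions."""
    return sum(BLOCK_INSTRUCTIONS[block])

@lru_cache(maxsize=None)
def wcet_path(block="entry"):
    """Return (cycles, path) for the most expensive path from `block` to an exit block."""
    cost = block_cost(block)
    successors = CFG[block]
    if not successors:
        return cost, (block,)
    best_cost, best_path = max(wcet_path(s) for s in successors)
    return cost + best_cost, (block,) + best_path

cycles, path = wcet_path()
print(cycles, " -> ".join(path))
```

Here the analysis returns 23 cycles along entry, check, slow_path, exit.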

However, this task is not easy to accomplish for complex software, as all execution paths must be considered. Fortunately, much of this can be automated. The difficulty increases further with more complex architectures, where the execution time of an instruction varies depending on the execution context. This dependency between execution time and processor state arises from several sources, most of which are related to features introduced in processors to improve the average execution time of software. These include branch prediction mechanisms, speculative execution, and caches.
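The effect of processor state can be shown with a toy direct-mapped cache model. In this minimal Python sketch, the cache geometry and the 1-cycle hit / 20-cycle miss latencies are hypothetical values; the point is only that the same sequence of loads takes very different amounts of time depending on what the cache already holds.

```python
# Hypothetical parameters of a toy processor with a direct-mapped data cache.
HIT_CYCLES = 1
MISS_CYCLES = 20
LINE_SIZE = 16   # bytes per cache line
NUM_LINES = 4    # number of cache lines

def run_loads(addresses, cache=None):
    """Return the total cycles for a sequence of loads, and the resulting cache state.

    `cache` maps a cache index to the tag it currently holds: this is the processor
    state on which execution time depends.
    """
    cache = dict(cache or {})
    cycles = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        index, tag = line % NUM_LINES, line // NUM_LINES
        if cache.get(index) == tag:
            cycles += HIT_CYCLES
        else:
            cycles += MISS_CYCLES
            cache[index] = tag  # fill the line, evicting whatever was there
    return cycles, cache

loads = [0x00, 0x04, 0x10, 0x14]  # the same sequence of loads is executed twice

cold_cycles, warm_state = run_loads(loads)           # starts from an empty cache
warm_cycles, _ = run_loads(loads, cache=warm_state)  # starts from a warm cache
print(cold_cycles, warm_cycles)
```

The first execution costs 42 cycles, the second only 4, for identical code.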

With all the subtle and complex microarchitecture mechanisms implemented in high-end processors, estimating WCET with the level of confidence required by critical systems becomes a major challenge. But the difficulties don’t stop there. When considering not just a single processing unit or core, but platforms composed of several such units, the problems multiply.

Each of these units may run distinct software on private hardware resources (pipelines, memory, etc.), but they generally share some hardware resources. This sharing leads to contention phenomena and, more broadly, dependencies of various kinds, which are sources of temporal interference.

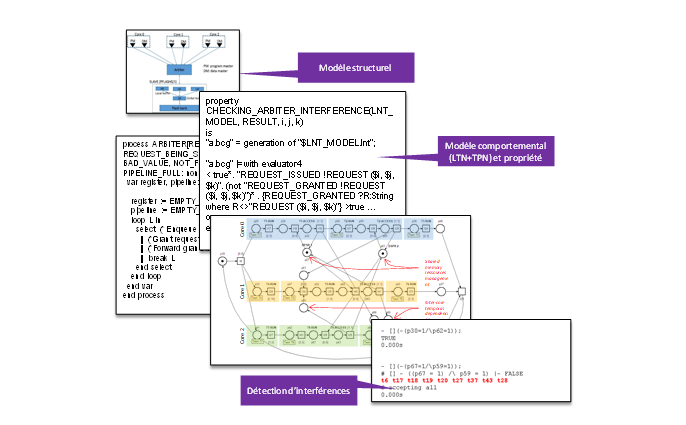

Why is accounting for these interferences a major challenge for timing analysis?

- Identifying sources of interference: It is necessary to catalog all the possible ways in which the execution of code on one core can affect the execution time of code on another core. Ensuring the completeness of this analysis is a challenge due to the sophistication of the architectures and, at times, the lack of documentation.

- Estimating the effects of interferences: Once identified, the interferences must be evaluated and bounded, avoiding overly pessimistic estimates.

- Combining results: The results from the WCET analysis “in isolation” must be combined with those from the interference analysis to obtain an estimate of the WCET “under contention conditions”.
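These three steps can be illustrated on a deliberately simple, hypothetical model: a single interference source (a shared bus with round-robin arbitration, one outstanding request per core, fixed transaction duration) and a core assumed to be timing-compositional, so that delays simply add up. The Python sketch below shows how knowledge of the co-running software reduces pessimism and how the isolation and interference results are combined; the function names and all figures are illustrative assumptions.

```python
def rr_bus_delay(own_accesses, other_accesses, slot_cycles):
    """Upper bound on the delay suffered on a shared bus with round-robin arbitration.

    Hypothetical model: one outstanding request per core, each transaction holds the bus
    for exactly `slot_cycles`. Under round-robin, each other core can get ahead of each of
    our accesses at most once, and never more often than it actually accesses the bus.
    """
    return slot_cycles * sum(min(n, own_accesses) for n in other_accesses)

def wcet_under_contention(wcet_isolation, own_accesses, other_accesses, slot_cycles):
    """Combine the isolation WCET and the interference bound by simple addition.

    Only valid for a timing-compositional core (no timing anomalies), an assumption here.
    """
    return wcet_isolation + rr_bus_delay(own_accesses, other_accesses, slot_cycles)

# Hypothetical figures: 100,000-cycle WCET in isolation, 2,000 bus accesses, 3 other cores.
OWN = 2_000
# Nothing known about the other cores: assume each is at least as bus-intensive as ours.
pessimistic = wcet_under_contention(100_000, OWN, [OWN] * 3, slot_cycles=10)
# Access counts of the co-running software are known (e.g., bounded by analysis).
informed = wcet_under_contention(100_000, OWN, [500, 3_000, 0], slot_cycles=10)
print(pessimistic, informed)
```

With these figures, knowing the behavior of the other cores brings the bound down from 160,000 to 125,000 cycles.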

A significant part of the work carried out by the CSEC team at IRT Saint Exupéry has focused, and continues to focus, on the timing analysis of systems, in particular on demonstrating that deadlines are met.

Over the years and through various projects, we have addressed many aspects of the problem:

- Micro-architecture: we have worked on microarchitectures designed to ease timing analysis (e.g., the RISC-V-based FlexPRET architecture developed at UC Berkeley), on interference analysis (e.g., the PhyLog tool and approach from ONERA), and on related topics.

- Software: we have conducted research on Worst-Case Execution Time (WCET) analysis using abstract interpretation techniques (with IRIT on the OTAWA tool) and empirical methods (with ONERA and RocqStat), as well as collaborating with Asterios Technologies and Rapita Systems on code instrumentation. We have also explored the implementation of synchronous languages (Lustre and ForeC, with INRIA).

- System: we have worked on implementing the synchronous model of Asterios at the scale of a distributed system.

- Network: our work has also included the analysis and configuration of communication networks based on Time-Sensitive Networking (TSN) technology.

Ultimately, all these efforts converge toward a single goal: enabling the implementation of increasingly complex functions while fully utilizing growing processing capabilities and ensuring the required temporal properties for our systems.

Would you like to go further?

W.-T. Sun, E. Jenn, and H. Cassé, “Build Your Own Static WCET analyser: the Case of the Automotive Processor AURIX TC275”, presented at the 10th European Congress on Embedded Real Time Software and Systems (ERTS 2020), Toulouse, France, Jan. 2020.

https://hal.science/hal-02507130v1/document

Damien Chabrol, Jean Guyomarc’H, Fabien Siron, Guillaume Phavorin, Sam Thompson, et al., “Separation of functional and time interference concerns for efficient AMC 20-193 compliance”, presented at ERTS 2024, Toulouse, France, Jun. 2024.

https://hal.science/hal-04649192v1/file/ERTS24-paper%20final.pdf

To find all our articles dedicated to this topic:

- Item 1: “Towards safer software and hardware systems”

- Item 2: “Time analysis of embedded systems”

- Item 3: “From multitude to harmony: programming for new platforms”