key competence

AI for critical systems

Recent progress in Artificial Intelligence, especially in Machine Learning, has aroused unprecedented interest in these technologies, and many industrial sectors are now considering adopting them. However, their adoption raises major scientific challenges.

Machine learning models, especially deep neural networks, now perform well enough to be considered for critical applications such as autonomous vehicles, predictive maintenance, and medical diagnosis, but their theoretical properties are still poorly understood.

These scientific challenges make it difficult to meet the industrial requirements for widespread deployment, such as certification, qualification, and explainability of algorithms. To address them, our goal is to create knowledge on:

- Standards and regulations: how to define new certification guidelines that will increase confidence in complex and adaptive systems;

- Defining the algorithmic and mathematical challenges of integrating machine learning algorithms (including neural networks) into critical systems;

- Developing new algorithms and mathematical frameworks with improved properties regarding certification and qualification;

- Development processes (design patterns) and incremental maintenance/correction of AI-based systems;

- Embedded AI: quantizing and reducing neural network implementations, and assessing their performance on specific infrastructures and hardware targets.
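The quantization step mentioned in the last item can be illustrated with a minimal sketch of symmetric per-tensor int8 post-training quantization of a single weight matrix. This is one common scheme, assumed here for illustration only; the function names are hypothetical and no particular embedded toolchain or hardware target is implied.

```python
import numpy as np

def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the symmetric int8 range.
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(256, 128)).astype(np.float32)  # toy weight matrix
q, scale = quantize_int8(w)
w_hat = dequantize_int8(q, scale)

print("float32 size:", w.nbytes, "bytes / int8 size:", q.nbytes, "bytes")
print("max abs error:", float(np.max(np.abs(w - w_hat))), "(bounded by scale/2 =", scale / 2, ")")
```

Storage drops by a factor of four, and each weight's rounding error stays within half a quantization step; assessing how this affects accuracy on a given target is the kind of performance evaluation the item refers to.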

R&T fields

REMOVING BIAS AND SENSITIVE INFORMATION FROM DATASETS IN MACHINE LEARNING

We propose and demonstrate solutions whose decisions are not influenced by bias, whether known or unknown. Such bias can make Machine Learning systems unfair and therefore non-dependable.

We define frameworks to understand how the distribution of the training sample affects the Machine Learning process. This understanding supports anomaly detection and the design of robust, efficient algorithms that are resilient to changes in their environment, that can take advantage of multiple inputs (transfer learning or consensus learning), and that can detect missing training data needed to protect themselves against adversarial conditions.
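For a known sensitive attribute, one common way to quantify bias is demographic parity: comparing the rate of positive decisions across groups. The sketch below is a minimal illustration on hypothetical toy data; it is one standard fairness metric, not the framework described above, and it does not address unknown biases.

```python
import numpy as np

def demographic_parity(y_pred, group):
    """Compare positive-prediction rates between two groups (encoded 0 and 1).

    Returns (rate_0, rate_1, difference, ratio). A difference of 0 and a
    ratio of 1 mean both groups receive positive decisions at the same rate.
    """
    y_pred = np.asarray(y_pred, dtype=float)
    group = np.asarray(group)
    rate_0 = y_pred[group == 0].mean()
    rate_1 = y_pred[group == 1].mean()
    difference = abs(rate_0 - rate_1)
    highest = max(rate_0, rate_1)
    ratio = min(rate_0, rate_1) / highest if highest > 0 else 1.0
    return rate_0, rate_1, difference, ratio

# Toy binary decisions for 10 individuals; `group` is the known sensitive attribute.
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]
group = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
rate_0, rate_1, difference, ratio = demographic_parity(y_pred, group)
print(f"rates: {rate_0:.2f} vs {rate_1:.2f}, difference={difference:.2f}, ratio={ratio:.2f}")
```

A ratio below 0.8 is often flagged in practice (the so-called four-fifths rule), but the acceptable threshold is an application-specific choice.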

THEORETICAL GUARANTEES FOR GENERALIZATION

Neural networks have demonstrated an impressive ability to generalize despite their large number of parameters. This ability is still poorly understood from a theoretical point of view, so it currently cannot be guaranteed.

We define new methods, conditions, and tools that provide stronger guarantees on the behavior of neural networks. Under given assumptions, these methods increase confidence in the robustness of deep learning algorithms and yield several indicators that could be submitted to the competent authorities as evidence during the certification process.
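As an example of an indicator that holds "under given assumptions", the classical one-sided Hoeffding bound states that, if the n test samples are i.i.d. and were never used for training or model selection, then with probability at least 1 − δ the true error R satisfies R ≤ R̂ + sqrt(ln(1/δ) / (2n)), where R̂ is the measured test error. This textbook bound is shown only as an illustration; it is not one of the new methods referred to above, and the numbers are hypothetical.

```python
import math

def hoeffding_upper_bound(test_error: float, n: int, delta: float) -> float:
    """One-sided Hoeffding upper bound on the true error rate.

    Assumes the n test samples are i.i.d. from the deployment distribution
    and were not used for training or model selection. With probability
    at least 1 - delta, the true error is <= the returned value.
    """
    margin = math.sqrt(math.log(1.0 / delta) / (2.0 * n))
    return min(1.0, test_error + margin)

def samples_needed(margin: float, delta: float) -> int:
    """Smallest test-set size n for which the Hoeffding margin is <= `margin`."""
    return math.ceil(math.log(1.0 / delta) / (2.0 * margin ** 2))

bound = hoeffding_upper_bound(test_error=0.02, n=10_000, delta=1e-3)
print(f"true error <= {bound:.4f} with probability >= 0.999")
print("test samples needed for a 0.005 margin:", samples_needed(0.005, 1e-3))
```

The second function shows why such guarantees are costly: halving the margin requires roughly four times as many independent test samples.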

ROBUSTNESS VIA DETECTION AND ADAPTATION TO UNKNOWN DATA

The whole machinery of Machine Learning techniques relies on the assumption that the training sample contains all the relevant information about the data. Hence an algorithm can only learn what is observed in its training data. Standard methods therefore cannot anticipate novelty, whereas in practice new behaviors may appear in the observed data.

We define and develop tools to detect unknown observations and to use these new observations to update and extend the capacity of the algorithms (including deep neural networks).
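A simple baseline for detecting unknown observations is to model the training data as a single Gaussian and flag inputs whose Mahalanobis distance exceeds a quantile of the training distances. The sketch below assumes this swapped-in technique, a hypothetical class name, and a 0.99 quantile threshold; it is an illustration, not the tools described above.

```python
import numpy as np

class MahalanobisNoveltyDetector:
    """Flags inputs that lie far from the training distribution.

    Models the training data by its mean and covariance, and uses a
    quantile of the training distances as the 'unknown' threshold.
    """

    def fit(self, X: np.ndarray, quantile: float = 0.99):
        self.mean_ = X.mean(axis=0)
        # pinv keeps the computation stable if the covariance is near-singular.
        self.precision_ = np.linalg.pinv(np.cov(X, rowvar=False))
        self.threshold_ = np.quantile(self.score(X), quantile)
        return self

    def score(self, X: np.ndarray) -> np.ndarray:
        """Mahalanobis distance of each row of X to the training mean."""
        d = X - self.mean_
        sq = np.einsum("ij,jk,ik->i", d, self.precision_, d)
        return np.sqrt(np.maximum(sq, 0.0))  # clip tiny negative round-off

    def is_unknown(self, X: np.ndarray) -> np.ndarray:
        return self.score(X) > self.threshold_

rng = np.random.default_rng(0)
X_train = rng.normal(size=(1000, 2))  # toy 2-D training data
detector = MahalanobisNoveltyDetector().fit(X_train)

X_new = np.array([[0.0, 0.0], [8.0, 8.0]])
flags = detector.is_unknown(X_new)
print(flags)
```

The point near the training mean is accepted and the distant one is flagged. In this simplified setting, the adaptation step amounts to validating flagged samples, appending them to the training data, and calling `fit` again; deep models require considerably more elaborate update procedures.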

EXPLAINABILITY

Machine Learning algorithms are designed to assist experts by providing valuable predictions, and in many fields they even tend to replace human decisions, achieving extremely good performance. Over the last decades, these algorithms have grown in complexity, from simple, interpretable prediction models based on regression rules to very complex models such as random forests, gradient boosting, and deep neural networks. Such models are designed to maximize predictive accuracy at the expense of the interpretability of the decision rule. To date, experts are still unable to describe how the information is processed to obtain a prediction, which is why such models are widely considered black boxes.

We define and develop several types of tools:

- for data scientists, to take explainability constraints into account;

- for detecting unknown observations and using them to update and extend the capacity of the algorithms (including deep neural networks).

We also define metrics that allow an objective assessment of the performance of explanation algorithms.
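One family of metrics used to evaluate explanations is deletion-style faithfulness: features are replaced by a baseline value in order of decreasing attribution, and the model output is tracked. A faithful explanation removes the truly influential features first, so the output falls quickly and the average is low. The sketch below assumes a toy linear model, a zero baseline, and a hypothetical function name; the metrics defined by the team may differ.

```python
import numpy as np

def deletion_score(model, x, attributions, baseline):
    """Mean model output while features are replaced by `baseline` values,
    highest attribution first. Lower means a more faithful ranking."""
    x_cur = np.array(x, dtype=float)
    outputs = [model(x_cur)]
    for i in np.argsort(-np.asarray(attributions)):
        x_cur[i] = baseline[i]
        outputs.append(model(x_cur))
    return float(np.mean(outputs))

# Toy linear model whose exact feature contributions are known.
w = np.array([3.0, 2.0, 1.0, 0.5])

def model(v: np.ndarray) -> float:
    return float(w @ v)

x = np.ones(4)
baseline = np.zeros(4)

faithful = w * x             # exact contributions for a linear model with zero baseline
unfaithful = faithful[::-1]  # same values, wrong ranking
score_faithful = deletion_score(model, x, faithful, baseline)
score_unfaithful = deletion_score(model, x, unfaithful, baseline)
print("faithful:", score_faithful, "unfaithful:", score_unfaithful)
```

The linear model is chosen because its true contributions are known, which makes it possible to check that the metric ranks a correct explanation above an incorrect one before applying it to black-box models.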

Our offer

- State-of-the-art reviews and technical syntheses

- Specific toolboxes

- Demonstrators and prototypes

- Know-how

- Databases and models

- Contributions to raising the Technology Readiness Level (TRL)

- Theses, scientific publications, conference papers

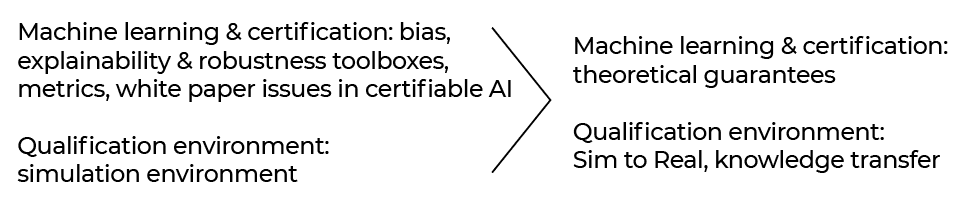

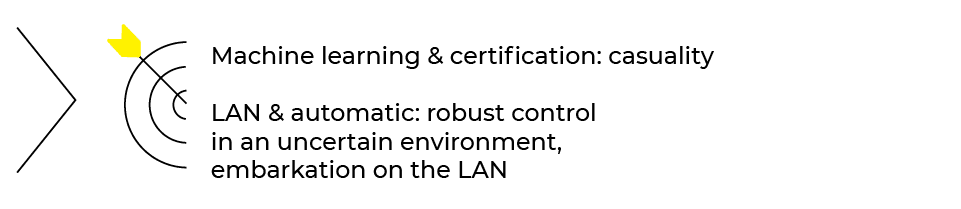

ROADMAP

2019 > 2025
